The headline “Government votes that animals can’t feel pain or emotions” suddenly cropped up – and soon became a widely read story. At first, mainstream media outlets didn’t cover this piece of non-news, which centered on the government’s omission of any mention of animals as sentient beings in its Brexit bill. (Essentially, there was concern that some animal welfare legislation wouldn’t carry over after the U.K.’s withdrawal from the EU; the government later clarified that animals can indeed feel pain.)

It didn’t take long for this story – which began on sites such as The London Economic, a progressive news website, and Wild Welfare, an animal welfare organization – to spread to other digital outlets and even mainstream publications such as The Independent. Beard says a survey at the time showed that 40% of U.K. voters under 25 had seen the story. However, as some outlets eventually reported, it wasn’t true.

“It had been taken totally out of context,” he explains. This and a few other stories made his team realize that even the smallest piece of so-called fake news could sway public opinion, whether it was misinformation (false or misinterpreted information) or disinformation (deliberately false information). At one point, his team found 50 different false narratives about the government and its actions.

The threat was so severe that in 2019 the U.K. government created what is believed to be the first-ever rapid-response unit to track and combat online misinformation, specifically targeting stories that could affect how the government runs or the well-being of its citizens. Beard was recruited to the team.

Mounting misinformation

The response team couldn’t have come at a better time. Over the last few years, online misinformation has grown dramatically. One study of the 2016 U.S. election found 38 million shares of deliberately fake news, which led to 760 million user clicks. The same study found that fake news sites received 159 million visits in the month around the November election.

The problem has escalated further in recent years, with fake or misleading stories popping up about everything from COVID-19 to the war in Ukraine and even Queen Elizabeth’s death (no, she was not shot dead in Detroit). False information also spreads quickly: one 2018 study found that falsehoods are 70% more likely to be retweeted than true news.

To combat misinformation, Beard and his team tracked news using media monitoring tools. They also created a four-step process: find, assess, create and target. “We needed a process because there’s so much misinformation out there,” says Beard. “[Misinformation] was messy and constantly churning. The solution was a multiple-step process to identify, analyze and respond to misinformation threats.”

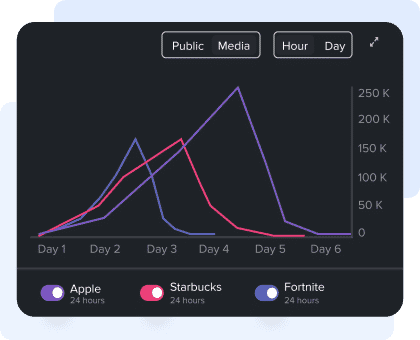

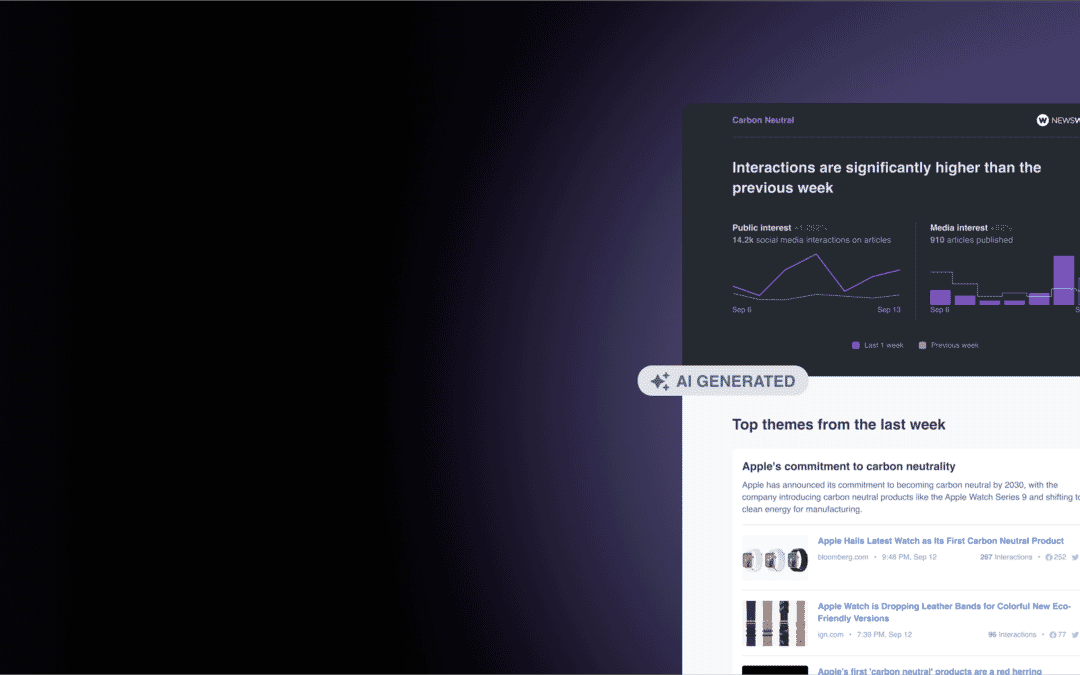

Media monitoring tools such as NewsWhip were essential for the “find” part of the process. The team identified eight topic areas to track, such as health and “Downing Street.” “You want to make sure you’re getting information about things you care about, not casting too wide a net.”

The team used these tools to search for headlines and social posts, then put that information into spreadsheets for analysts to comb through. It generated a daily report showing which stories and topics were on the rise and which were fading.

For issues that were gaining traction and risked harming the public or the government, the team moved into “create” and “target” mode. That meant countering misinformation by commenting directly on news stories or replying to tweets with accurate information. It also involved creating social media posts on specific topics or launching full-scale communications campaigns.

Pandemic problems

Before 2020, Beard and the team tracked an average of seven concerning stories a week, ranging from deliberately misleading crime reporting to fantastical Brexit disaster narratives. But when COVID-19 arrived, that number jumped to about 70 narratives a week, many of which went viral. “Some of the top stories were getting almost a million interactions, which was more than we’d ever seen before,” says Beard. “With this unprecedented amount of interest, we had to change how we were doing things.”

Making matters worse, many of these conversations were happening in closed groups, such as those on WhatsApp, while people were using advanced technologies to create fake posters that looked as if they came from the U.K.’s National Health Service, as well as increasingly believable fake images.

Staffing grew as the unit’s workload soared. The team also had to start focusing on the most harmful misinformation, adjusting its “find” and “assess” steps to consider how much a story could harm people or erode trust in the government.

The unit changed how it counteracted misinformation, too, especially since sites telling people to, say, drink hot water to kill COVID-19 claimed the research came from real university professors and researchers (it did not). At the time, it was extremely difficult for the public to know what was real and what was not about the pandemic. Instead of simply creating new posts with the correct information, the unit included the original false message in its posts and then corrected it. “It made it easier to recognize and share,” says Beard.

Overall, the team used five approaches to counter fake news: counter-narratives, amplifying existing content, debunking, direct rebuttals and explainers. Its content was always neutral in tone and focused on the facts, and it often worked.

Unfortunately, the misinformation keeps coming, says Beard. With technology making it easier than ever to create a fake news website, and more sophisticated players pushing out falsehoods, it can sometimes feel like a losing battle. Fortunately, he adds, “people’s awareness of misinformation is much higher.”

Many other organizations and companies are dealing with the same issues as the U.K. government. Having a process to combat lies and falsehoods is critical, says Beard, who is now an independent consultant. “Be systematic and decide on your monitoring toolbox,” he explains, adding that people must create a framework early, before disaster comes their way. “Decide what you’re going to do now. Don’t wait for misinformation to happen.”

To learn about more organizations working to combat misinformation, read our NewsGuard blog.